Leveraging LLM-Powered Chatbots for Next-Generation Customer Support

In today’s fast-paced, customer-centric business environment, AI-driven solutions have become an important way to stay competitive. Customer support is one area where AI adoption, particularly Large Language Models (LLMs) and Generative AI (GenAI), is creating transformative opportunities. These models apply natural language processing to understand customer queries written in everyday language and to respond to them efficiently. Using LLMs such as GPT-4, businesses can analyze conversations in real time, extract relevant information, and generate helpful responses. Automating repetitive questions in this way can significantly improve efficiency and frees human agents to focus on more complex issues. Implemented strategically, AI can enhance customer satisfaction, make service more consistent, and ultimately strengthen brand loyalty.

Benefits of LLM-Powered Chatbots in Customer Support

One of the primary benefits of LLM-powered chatbots is their ability to give personalized responses while significantly reducing response times. Unlike traditional rule-based systems that rely on predefined responses, these chatbots can interpret nuanced customer queries, tailor their answers to the conversation history, and offer proactive support. They also scale well: a single deployment can handle many conversations concurrently, subject to the model provider’s rate limits, so spikes in query volume can be answered promptly without lowering response quality. Quick resolutions like these boost customer satisfaction. These chatbots can also be integrated with existing support channels, such as social media, email, and phone systems, to provide a cohesive and consistent experience across all touchpoints. In doing so, they complement the human support team by handling routine tasks and making sure critical issues reach agents promptly.

A Framework for Implementing LLM-Powered Chatbots

Initial Assessment: Understanding Business Needs and Customer Expectations

Before implementing an LLM-powered chatbot, it is crucial to conduct a thorough assessment of business needs and customer expectations. Organizations should analyze their customer support landscape to identify common pain points, frequently asked questions, and areas where human agents often struggle. This understanding helps define the specific goals and performance metrics the chatbot should meet, whether that is reducing response times, improving first-contact resolution rates, or raising customer satisfaction scores. It is also essential to evaluate customer demographics and preferences so the chatbot’s language style, tone, and approach can be customized. This assessment phase helps ensure that the final solution is relevant and addresses the organization’s actual challenges.
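
Measuring a baseline before launch makes the chatbot’s goals testable later. The sketch below computes two of the metrics mentioned above from historical tickets. The `Ticket` record and its field names are hypothetical (map your help-desk export onto them), and first-contact resolution is defined here as the share of resolved tickets closed after a single customer contact; organizations define FCR in slightly different ways.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import Dict, List, Optional

@dataclass
class Ticket:
    # Hypothetical record shape; map your help-desk export onto these fields.
    created_at: datetime
    first_response_at: Optional[datetime]
    contacts_to_resolve: int  # customer contacts needed before the ticket was closed
    resolved: bool

def baseline_metrics(tickets: List[Ticket]) -> Dict[str, float]:
    """Median first-response time in minutes and first-contact resolution (FCR) rate."""
    waits = [
        (t.first_response_at - t.created_at).total_seconds() / 60
        for t in tickets
        if t.first_response_at is not None
    ]
    resolved = [t for t in tickets if t.resolved]
    # FCR here = share of resolved tickets closed after a single contact.
    fcr = (
        sum(1 for t in resolved if t.contacts_to_resolve == 1) / len(resolved)
        if resolved else 0.0
    )
    return {
        "median_first_response_min": float(median(waits)) if waits else 0.0,
        "first_contact_resolution_rate": fcr,
    }
```

Running the same function on tickets handled after launch gives a like-for-like comparison against these baseline figures.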

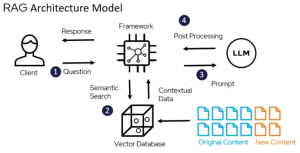

Understanding RAG Architecture

Retrieval-Augmented Generation (RAG) architecture is central to building effective LLM chatbots. It combines two components: a retriever that searches external sources, such as the company’s knowledge bases or documentation, for information relevant to a query, and a generator, a pre-trained language model such as GPT-4, that writes the response using the retrieved passages as context. Because each answer is grounded in the organization’s own content rather than only in what the model learned during training, the chatbot can give accurate, contextually rich answers and is less prone to hallucinations (fabricated answers). The model itself is not retrained; updating the knowledge base is enough to change what the chatbot can answer.
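
The retrieve-then-generate flow can be sketched in a few lines. This is a self-contained illustration, not a production design: `toy_embed` is a bag-of-words stand-in for a real embedding model, and `generate` is any function you supply that sends a prompt to an LLM and returns its text. The prompt wording is an assumption.

```python
import math
import re
from collections import Counter
from typing import Callable, List

def toy_embed(text: str) -> Counter:
    # Stand-in for a real embedding model: a bag-of-words term-count vector.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine_counts(a: Counter, b: Counter) -> float:
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, docs: List[str], k: int = 2) -> List[str]:
    """Retriever: return the k documents most similar to the query."""
    q = toy_embed(query)
    return sorted(docs, key=lambda d: cosine_counts(q, toy_embed(d)), reverse=True)[:k]

def build_prompt(query: str, passages: List[str]) -> str:
    """Ground the generator by telling it to answer only from the retrieved passages."""
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return (
        "Answer the customer's question using only the context below. "
        "If the context does not contain the answer, say you don't know.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    )

def rag_answer(query: str, docs: List[str], generate: Callable[[str], str], k: int = 2) -> str:
    # `generate` is any function that sends a prompt to an LLM and returns its reply.
    return generate(build_prompt(query, retrieve(query, docs, k)))
```

In a real deployment, `toy_embed` would be replaced by an embedding API and the linear scan in `retrieve` by a vector database query; the prompt-assembly step stays essentially the same.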

Selecting the Right Platform: Why ChatGPT APIs and OpenAI Embeddings API Stand Out

Choosing a robust platform is fundamental to a successful implementation. OpenAI’s suite, including the ChatGPT (Chat Completions) API and the Embeddings API, provides tools that can be adapted to many needs. The chat API lets teams add conversational AI to existing systems through standard HTTP requests, supporting real-time customer interactions. The Embeddings API converts text into high-dimensional vectors that capture its meaning, which enables semantic search: a query can match a relevant article even when the two share few exact words. Together, these APIs let developers ground the chatbot’s responses in the organization’s own knowledge base and deliver fast, accurate information.
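
A minimal sketch of calling both APIs, assuming the official `openai` Python package (version 1.0 or later), where a client is created with `from openai import OpenAI; client = OpenAI()` and the key is read from the `OPENAI_API_KEY` environment variable. The model names and the system prompt are assumptions; substitute the models your account uses. The functions take the client as a parameter so they can be tested with a stub.

```python
from typing import Any, Dict, List

EMBED_MODEL = "text-embedding-3-small"  # assumption: choose your embedding model
CHAT_MODEL = "gpt-4o"                   # assumption: any chat-capable model

def embed_texts(client: Any, texts: List[str], model: str = EMBED_MODEL) -> List[List[float]]:
    """Return one embedding vector per input text, in input order."""
    response = client.embeddings.create(model=model, input=texts)
    # Sort by index so the vectors line up with `texts`.
    return [item.embedding for item in sorted(response.data, key=lambda d: d.index)]

def build_messages(question: str, passages: List[str]) -> List[Dict[str, str]]:
    """Chat messages that restrict the model to the retrieved knowledge-base passages."""
    context = "\n\n".join(passages)
    return [
        {"role": "system", "content": (
            "You are a customer support assistant. Answer only from the provided "
            "context; if it is insufficient, say so and offer a human agent.")},
        {"role": "user", "content": f"Context:\n{context}\n\nQuestion: {question}"},
    ]

def chat_answer(client: Any, question: str, passages: List[str], model: str = CHAT_MODEL) -> str:
    response = client.chat.completions.create(
        model=model,
        messages=build_messages(question, passages),
        temperature=0.2,  # lower temperature for more consistent support answers
    )
    return response.choices[0].message.content
```

Typical use: embed knowledge-base chunks once with `embed_texts`, store the vectors, embed each incoming question, fetch the nearest chunks, and pass them to `chat_answer`.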

Vector Database Selection: Pinecone vs. PostgreSQL with pgvector

An essential component of the retrieval system is the vector database, which stores embeddings and quickly finds those nearest to a query vector. Two popular options are Pinecone and PostgreSQL with the pgvector extension. Pinecone is a managed service specializing in high-performance, scalable indexing of large datasets. It offers fast, low-latency search with minimal maintenance, which makes it well suited to large enterprises. pgvector, by contrast, is an open-source extension that adds a vector column type and similarity search to PostgreSQL. It suits teams already running PostgreSQL, since embeddings can live alongside existing relational data and no new infrastructure needs to be adopted. The right choice depends on organizational scale, performance requirements, and existing infrastructure.
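
For teams taking the PostgreSQL route, the sketch below shows the pgvector side. The table name `kb_chunks` and the 1536-dimension size are assumptions (1536 matches `text-embedding-3-small`’s default output). The statements use pgvector’s `vector` type, its `<=>` cosine-distance operator, and an HNSW index, which requires pgvector 0.5.0 or later. The helpers accept any DB-API cursor that uses `%s` placeholders, such as one from psycopg.

```python
from typing import Any, List, Sequence

EMBEDDING_DIM = 1536  # assumption: must match the embedding model you use

# Run once at setup; executed one statement at a time for driver compatibility.
SCHEMA_STATEMENTS = [
    "CREATE EXTENSION IF NOT EXISTS vector",
    f"""CREATE TABLE IF NOT EXISTS kb_chunks (
        id        bigserial PRIMARY KEY,
        content   text NOT NULL,
        embedding vector({EMBEDDING_DIM}) NOT NULL
    )""",
    # HNSW index (pgvector 0.5.0+) built for cosine distance.
    "CREATE INDEX IF NOT EXISTS kb_chunks_embedding_idx "
    "ON kb_chunks USING hnsw (embedding vector_cosine_ops)",
]

# `<=>` is pgvector's cosine-distance operator: smaller values mean more similar.
SEARCH_SQL = "SELECT content FROM kb_chunks ORDER BY embedding <=> %s::vector LIMIT %s"

def to_vector_literal(values: Sequence[float]) -> str:
    """Format numbers in pgvector's text input format, e.g. '[0.1,0.2]'."""
    return "[" + ",".join(repr(float(v)) for v in values) + "]"

def create_schema(cursor: Any) -> None:
    for statement in SCHEMA_STATEMENTS:
        cursor.execute(statement)

def insert_chunk(cursor: Any, content: str, embedding: Sequence[float]) -> None:
    cursor.execute(
        "INSERT INTO kb_chunks (content, embedding) VALUES (%s, %s::vector)",
        (content, to_vector_literal(embedding)),
    )

def search(cursor: Any, query_embedding: Sequence[float], k: int = 5) -> List[str]:
    """Return the k stored chunks closest to the query embedding."""
    cursor.execute(SEARCH_SQL, (to_vector_literal(query_embedding), k))
    return [row[0] for row in cursor.fetchall()]
```

Remember to commit the connection after `create_schema` and `insert_chunk`. Passing vectors as text literals avoids extra dependencies; the `pgvector` Python package offers driver adapters if you prefer native types.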

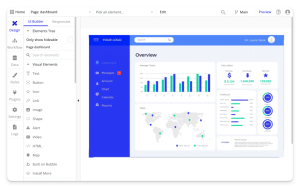

Building a User-Friendly Front-End with No-Code Platforms (e.g., Bubble.io)

Once the backend and architecture are in place, a user-friendly front end is critical for maximizing adoption and satisfaction. No-code platforms like Bubble.io let businesses build intuitive interfaces quickly without extensive coding skills. Using visual drag-and-drop editors and pre-built elements, teams can customize the chatbot’s UI and UX to match their branding guidelines and connect it to backend services through API calls. These platforms also make iteration fast, so organizations can refine chatbot designs and workflows in response to user feedback. The result is a responsive chatbot that aligns with customer support goals and improves the overall customer experience.
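
A no-code front end typically reaches the backend through a JSON HTTP endpoint; in Bubble, this is usually configured with the API Connector plugin. The sketch below is a minimal endpoint using only Python’s standard library. The `/chat` path, the `{"question": ...}` request shape, and `answer_question` are hypothetical placeholders, and the built-in server is for development only; production use needs HTTPS, authentication, and a proper web framework.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Any, Dict, Tuple

def answer_question(question: str) -> str:
    # Hypothetical placeholder: call your retriever + LLM pipeline here.
    return f"(stub) You asked: {question}"

def handle_chat(body: bytes) -> Tuple[int, Dict[str, Any]]:
    """Validate a JSON body like {"question": "..."} and return (status, response)."""
    try:
        payload = json.loads(body or b"{}")
    except ValueError:  # covers malformed JSON and undecodable bytes
        return 400, {"error": "invalid JSON"}
    question = payload.get("question") if isinstance(payload, dict) else None
    if not isinstance(question, str) or not question.strip():
        return 400, {"error": "field 'question' is required"}
    return 200, {"answer": answer_question(question.strip())}

class ChatHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        if self.path != "/chat":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, data = handle_chat(self.rfile.read(length))
        body = json.dumps(data).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

def serve(port: int = 8000) -> None:
    """Development server only; start it with serve() and POST to /chat."""
    HTTPServer(("0.0.0.0", port), ChatHandler).serve_forever()
```

Keeping validation in `handle_chat`, separate from the HTTP plumbing, makes it easy to move the same logic into a production framework later.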

Beyond Customer Support: By-products of LLM-Powered Solutions

The value of LLM-powered solutions extends beyond traditional customer support, delivering by-products that streamline content creation and internal processes. One notable by-product is the automated generation of Knowledge Base (KB) articles. By analyzing customer interactions and identifying frequently asked questions, LLMs can draft detailed KB articles for both internal and external use, which support staff can then review and publish. These articles give comprehensive answers to common customer concerns, reducing the workload on support teams and giving customers self-service options. Internal teams also benefit from up-to-date documentation, which can speed up issue resolution and improve overall operational efficiency.
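
Drafting a KB article is largely a matter of prompting with a resolved conversation. The sketch below builds messages in the Chat Completions `messages` format from a ticket thread; the thread structure (`speaker`/`text` keys), the article outline in the prompt, and the product name used in testing are assumptions. It also masks email addresses before the transcript leaves your systems; the single regex shown is deliberately simple, and real redaction needs broader rules for names, phone numbers, and order IDs.

```python
import re
from typing import Dict, List

# Simple email pattern; real PII redaction needs broader rules.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")

def redact(text: str) -> str:
    """Replace email addresses with a placeholder."""
    return EMAIL_RE.sub("[email]", text)

def kb_draft_messages(thread: List[Dict[str, str]], product: str) -> List[Dict[str, str]]:
    """Build chat messages asking an LLM to draft a KB article from a resolved ticket thread."""
    transcript = "\n".join(f"{turn['speaker']}: {redact(turn['text'])}" for turn in thread)
    instructions = (
        f"You write knowledge base articles for {product}. From the resolved support "
        "conversation below, draft an article with a title phrased as the customer's "
        "question, a one-paragraph summary, numbered resolution steps, and a closing "
        "line on how to contact support. Omit any personal details. Do not add steps "
        "that do not appear in the conversation."
    )
    return [
        {"role": "system", "content": instructions},
        {"role": "user", "content": transcript},
    ]
```

The returned list can be passed as `messages` to a chat completion call; the instruction not to invent steps reduces, but does not eliminate, the need for human review before publishing.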

Another by-product is technical blog posts for website content marketing. Drawing on customer queries, product usage patterns, and support tickets, LLMs can draft blog posts that are informative and aligned with customer interests. These posts can showcase new features, explain solutions to common problems, or highlight innovative uses of the company’s products. This approach helps marketing teams maintain a consistent content pipeline, engage audiences with relevant information, and strengthen the brand’s thought leadership.

Generating FAQs from real customer queries is another area where LLM-powered chatbots shine. By mining historical support data, for example by grouping semantically similar questions and counting how often each group appears, these systems can identify recurring themes and compile them into accurate, concise FAQ sections. These FAQs help customers find answers quickly without needing direct assistance. This proactive approach reduces the strain on support agents and creates a resource that reflects customers’ actual needs and challenges, which in turn can raise satisfaction as customers get swift access to reliable answers to their specific concerns.
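
One simple way to find recurring questions is to cluster query embeddings and rank the clusters by size. The sketch below uses greedy single-pass clustering with a cosine-similarity threshold; this is one technique among several (k-means or density-based clustering are common alternatives), and the 0.85 threshold and `min_count` cut-off are assumptions to tune on your own data. The vectors would normally come from an embedding model; the test uses tiny hand-made ones.

```python
import math
from typing import List, Sequence, Tuple

def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def cluster_queries(queries: List[str], vectors: List[Sequence[float]],
                    threshold: float = 0.85) -> List[List[str]]:
    """Greedy clustering: a query joins the first cluster whose representative
    (its first member) is at least `threshold` similar; otherwise it starts a new one."""
    reps: List[Sequence[float]] = []
    clusters: List[List[str]] = []
    for query, vec in zip(queries, vectors):
        for i, rep in enumerate(reps):
            if cosine(vec, rep) >= threshold:
                clusters[i].append(query)
                break
        else:  # no sufficiently similar cluster found
            reps.append(vec)
            clusters.append([query])
    return clusters

def faq_candidates(queries: List[str], vectors: List[Sequence[float]],
                   threshold: float = 0.85, min_count: int = 2) -> List[Tuple[str, int]]:
    """(representative question, frequency) for clusters seen at least `min_count` times."""
    ranked = sorted(cluster_queries(queries, vectors, threshold), key=len, reverse=True)
    return [(c[0], len(c)) for c in ranked if len(c) >= min_count]
```

Each top-ranked cluster can then be sent to an LLM, together with the relevant KB passages, to draft a single FAQ question and answer for review.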

Ultimately, these by-products of LLM-powered chatbots offer organizations multi-faceted benefits. They reduce the burden on support teams, facilitate better customer engagement, and help establish more efficient, content-rich ecosystems that resonate with target audiences.

Conclusion

Embracing LLM-powered chatbots can transform customer support into a more efficient, responsive, and personalized function. Their ability to deliver rapid, accurate answers, automate repetitive tasks, and integrate with existing systems makes them a valuable asset for businesses striving for excellence in customer satisfaction. As AI integration evolves, we can expect trends such as more refined personalization, predictive analytics, and deeper integration with other enterprise systems. These advancements point to a landscape where chatbots not only support but proactively enhance the customer journey. Now is the time for organizations to assess their current customer support models and strategically adopt these technologies to unlock new levels of efficiency and satisfaction, driving long-term success and customer loyalty.